The Simplicity Trap: When a Simple Site Demands a Complex CI/CD

kosar

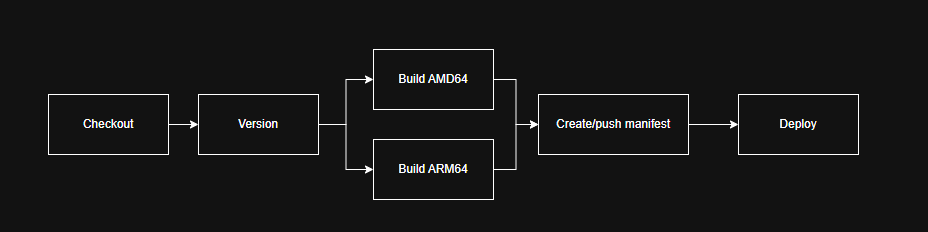

Yes, the title isn't clickbait. Just for context: we went from this:

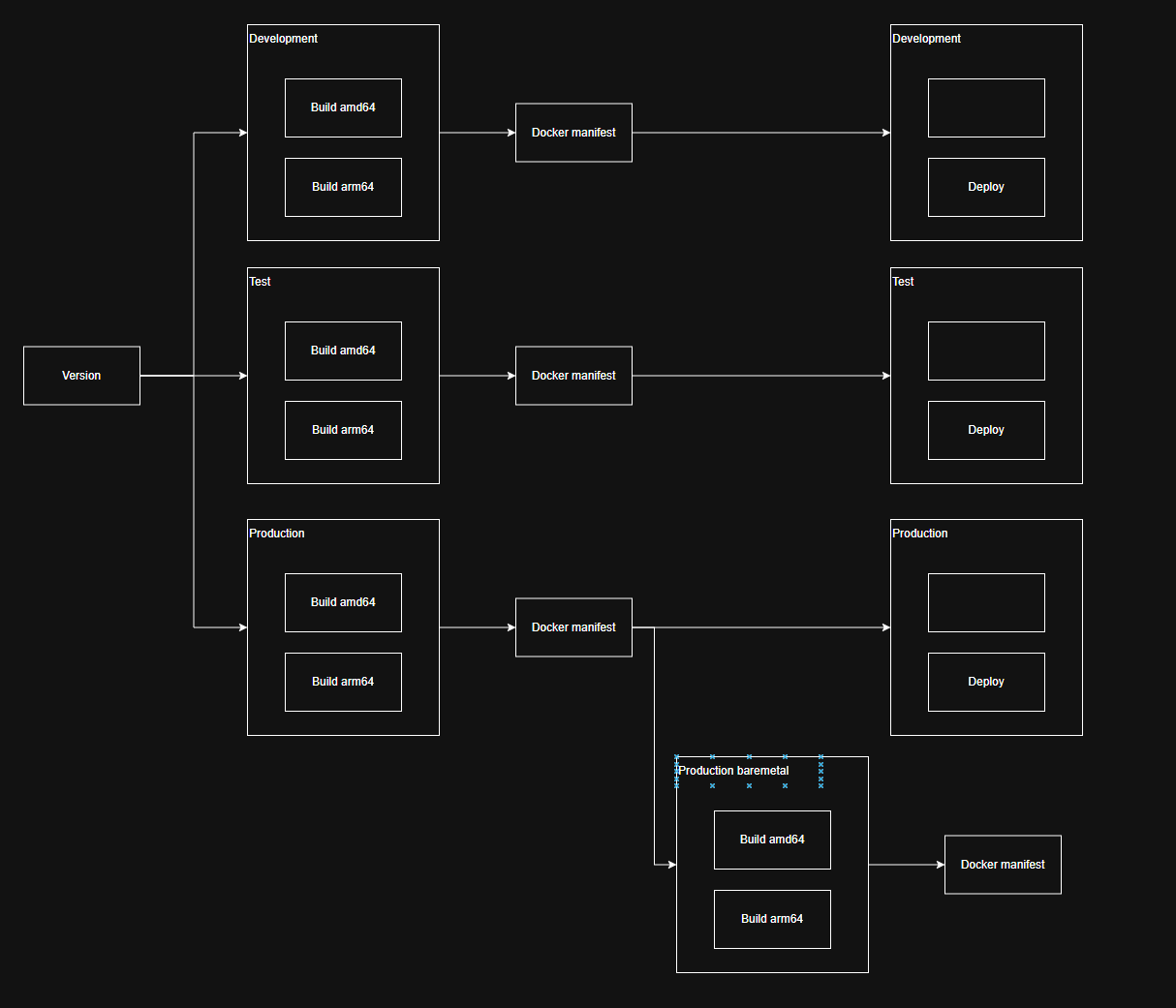

to this:

And this is just for a regular React service that compiles to static files.

It's quite difficult to describe the process without revealing project details that are under NDA, but I'll try. It all started with a perfectly normal scenario. There were several services: an API, another API, another API, yet another API... authentication, cartography, navigation, WebRTC and video streams. But that's not what this is about. Right now we're talking specifically about a regular frontend service that simply compiles to static files and is served by nginx. All classic: a Dockerfile, npm install, npm run build, and FROM nginx:stable. Nothing unusual. Except for one thing: the focus on an offline bare-metal environment that shouldn't cost an arm and a leg.

So where do these complexities come from, and why is this a trap? First of all, let's talk about optimization, which was built into the project from the start. Most of us have probably seen applications that consume gigabytes of RAM while offering just two pages and three forms. That's exactly the scenario we wanted to avoid. So the developers heavily optimized the code and used nginx as the web server instead of a Node process. Compiling to static files pays off here: the final image contains only nginx and the built assets, with no Node runtime. So we ended up with roughly the following Dockerfile:

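```dockerfile
# Runtime stage: nginx serves the compiled static files.
# Note: nginx:stable serves /usr/share/nginx/html by default, so this layout
# assumes a custom server config (not shown) with root /var/www/html.
FROM nginx:stable AS base
WORKDIR /var/www/html

# Build stage: install exact dependencies and compile the React app.
FROM node:18.20.4 AS build
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci
COPY . .
RUN npm run build

# Final image: only nginx plus the build output, no Node runtime.
FROM base AS final
COPY --from=build /app/dist /var/www/html
```

And a regular GitHub Actions pipeline consisting of the usual steps: checkout, docker login, docker build/push, deploy to k8s. Since we needed to support 3 environments (dev, test, and prod), there were also 3 pipelines. No complications.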

However, the project grew more complex: the code was optimized and security was tightened. Build arguments became unavoidable: separate API URLs for each environment, dedicated authentication servers, debug mode for development. At the same time, we wanted to avoid code duplication. Around then, a requirement also surfaced that the project must be able to work completely offline. So we arrived at matrix builds. The Dockerfile gained arguments that were set in the pipeline, and the pipeline itself was transformed. We also thought about versioning, which we quickly implemented as date plus commit hash. By the way, this versioning scheme is quite universal and is still used by all our services to this day. Development and Test remained regular pipelines, but Production now had to be built in both cloud and baremetal variants. Differences in the baremetal version:

- completely offline operation

- its own CA and certificates

- some environment-specific configuration
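The date-plus-commit-hash versioning mentioned above fits into a single shell step. A minimal sketch, assuming a UTC `YYYYMMDD` date and a 7-character short SHA (the exact format used in the project isn't shown); the function name `make_version` is hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print a version string "<date>-<short commit hash>".
# Both parts may be passed explicitly; empty or missing arguments fall back
# to the current UTC date and the checked-out commit.
make_version() {
  local date_part="${1:-$(date -u +%Y%m%d)}"
  local sha="${2:-$(git rev-parse --short=7 HEAD)}"
  printf '%s-%s\n' "$date_part" "$sha"
}

# In a GitHub Actions step (e.g. a bump-version job), expose it as an output:
#   echo "version=$(make_version)" >> "$GITHUB_OUTPUT"
```

Keeping the date first makes tags sort chronologically in the registry, while the hash ties each image back to an exact commit.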

As a result, the Production build stage turned into something like this:

```yaml
build-production:
  runs-on: ubuntu-latest
  if: ${{ github.ref == 'refs/heads/main' }}
  strategy:
    matrix:
      include:
        # Cloud variant
        - image_tag: ${{ needs.bump-version.outputs.version }}
          arg1: foo
          arg2: bar
        # Baremetal variant, tagged with a -bm suffix
        - image_tag: ${{ needs.bump-version.outputs.version }}-bm
          arg1: baz
          arg2: qux
  needs: [bump-version]
  steps:
    - uses: actions/checkout@v3
    - name: Login into ACR
      uses: azure/docker-login@v1
      with:
        login-server: ${{ secrets.REGISTRY_URL }}
        username: ${{ secrets.REGISTRY_USERNAME }}
        password: ${{ secrets.REGISTRY_PASSWORD }}
    - name: Build and push docker containers
      uses: docker/build-push-action@v2
      with:
        build-args: |
          ARG1=${{ matrix.arg1 }}
          ARG2=${{ matrix.arg2 }}
        # Default variables such as GITHUB_WORKSPACE are not exposed via the
        # env context; the github context provides the workspace path.
        context: ${{ github.workspace }}
        file: ${{ env.DOCKER_FILE }}
        platforms: linux/amd64
        push: true
        tags: |
          ${{ env.IMAGE_NAME }}:${{ matrix.image_tag }}
```

Then cloud providers started adding ARM64 support, which became an excellent optimization opportunity for us. Building code for multiple platforms? No problem (yeah, right). ARM64 gives not only a performance boost "on paper", but also lower costs in real life: on average, 25-35% per k8s node. And for baremetal, there's the option to use cheaper Raspberry Pi 5 boards instead of the amd64 hardware we were using at that time. The first thing I did was simply add QEMU, Buildx, and another platform to the docker build in the pipeline. Path of least resistance.

```yaml
# ...
- name: Set up QEMU
  uses: docker/setup-qemu-action@v2
- name: Set up docker context for buildx
  run: docker context create builder
- name: Set up Docker Buildx
  id: buildx
  uses: docker/setup-buildx-action@v2
  with:
    version: latest
    endpoint: builder
- name: Build and push docker containers
  uses: docker/build-push-action@v2
  with:
    build-args: |
      ARG1=${{ matrix.arg1 }}
      ARG2=${{ matrix.arg2 }}
    context: ${{ github.workspace }}
    file: ${{ env.DOCKER_FILE }}
    # arm64 is built on the amd64 runner under QEMU emulation
    platforms: linux/amd64,linux/arm64
    push: true
    tags: |
      ${{ env.IMAGE_NAME }}:${{ matrix.image_tag }}
```

This is the moment I call the simplicity trap. First, the variability: instead of a linear pipeline, we now had builds with individual parameters for each environment, plus an additional platform. Second, build time suddenly grew to 100-110 minutes. At that time, ARM servers were only starting to appear, and there weren't yet posts about Buildx and QEMU problems. All we knew was that building arm64 images under QEMU emulation on amd64 runners took far longer than anything we considered acceptable. What did we do? Nothing. Production releases were infrequent at this stage of the project anyway, and the baremetal environment was only needed for demos. So we left everything as is, and I left the project for about 2 years.

What changed over that time? The project moved into a more active phase, production releases became more frequent, and no one wanted to put up with an almost two-hour build. Buildx and QEMU were still slow, so I had a thought: what if we remove the emulation? ARM64 nodes have long existed in the clouds, so maybe Microsoft had finally added hosted ARM64 agents? And yes, Microsoft lived up to my expectations and did NOT add ARM64 agents. Thanks for the stability! (Insert "sarcasm" sign here.)

Well, I mean... They did add macos-latest, which runs on ARM64. But it doesn't support Docker. Okay, the solution: buy an ARM64 virtual machine in Azure, or in some cheaper cloud, and set up a self-hosted GitHub Actions runner on it.

And then the fun began. The number of variables and variants grew so large that the build matrix description at some point exceeded 200 lines, and even then it wasn't complete. We needed to describe the following:

- build arguments for dev, test, prod, and prod-baremetal environments

- the agent on which the build will happen (amd64 or arm64)

- tie the build platform to the runner platform
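Written out as plain data, these requirements already multiply. Assuming every environment is built for both platforms (as the reusable template later in this article does), four environments times two platforms give eight platform-specific builds, each pinned to a runner, plus one manifest per environment. A minimal sketch that enumerates them, using the runner labels `ubuntu-latest` and `ARM64` from that template; the function names are hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical mapping: which runner label builds which platform natively.
runner_for_platform() {
  case "$1" in
    amd64) echo "ubuntu-latest" ;;
    arm64) echo "ARM64" ;;   # label of the self-hosted ARM64 runner
    *) echo "unknown platform: $1" >&2; return 1 ;;
  esac
}

# Print one "environment platform runner" line per build job.
enumerate_builds() {
  local env platform
  for env in dev test prod prod-bm; do
    for platform in amd64 arm64; do
      printf '%s %s %s\n' "$env" "$platform" "$(runner_for_platform "$platform")"
    done
  done
}

enumerate_builds
```

Each of those eight lines has to become a matrix entry carrying its own build arguments, which is where the 200-line matrix comes from.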

Doesn't seem like much? Try doing something like that, using ${{ matrix.value }} everywhere, and you'll understand what I'm talking about. Let me remind you that after building for the separate platforms, you need one more step to create a multi-arch Docker manifest that covers both platforms. At this stage, I had roughly the following scheme in my head:

But it was based on a matrix, and, predictably, it didn't work. Then I decided to try reusable templates (GitHub's reusable workflows). There's a matrix there too, by the way, but the simplest possible one: it just "nails" the build for a specific platform to a specific type of runner using runner labels.
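Pinning by label only helps if the runner really has that architecture; a mislabeled runner would either emulate again (if QEMU is registered on it) or fail mid-build. A cheap safeguard, sketched here as an assumption rather than part of the original pipeline, is a first step that compares the requested platform with the runner's `uname -m` and fails fast on a mismatch. The function names are hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Map a `uname -m` machine name to Docker's architecture name.
docker_arch_for_machine() {
  case "$1" in
    x86_64 | amd64) echo "amd64" ;;
    aarch64 | arm64) echo "arm64" ;;
    *) echo "unsupported machine: $1" >&2; return 1 ;;
  esac
}

# Fail if the requested platform (e.g. matrix.platform) is not the runner's
# native architecture, i.e. the build would need emulation.
assert_native() {
  local wanted="$1" native
  native="$(docker_arch_for_machine "$(uname -m)")"
  if [[ "$wanted" != "$native" ]]; then
    echo "refusing to build $wanted on a $native runner" >&2
    return 1
  fi
  echo "native build: $wanted"
}
```

In the build job this could run before Buildx is set up, for example by sourcing the script and calling `assert_native "${{ matrix.platform }}"`.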

So I ended up with roughly the following template that I could reuse:

```yaml
name: Build

env:
  IMAGE_NAME: docker.io/acme/service
  REGISTRY_URL: docker.io
  REGISTRY_USERNAME: acme
  DOCKER_FILE: docker/Dockerfile
  CONTEXT: .
  PLATFORMS: amd64,arm64

on:
  workflow_call:
    inputs:
      version:
        required: true
        type: string
      args_arg1:
        required: true
        type: string
      args_arg2:
        required: true
        type: string
    secrets:
      REGISTRY_PASSWORD:
        required: true
    outputs:
      image_tag_common:
        description: "The common image tag without platform suffix"
        value: ${{ jobs.manifest.outputs.image_tag_common }}

jobs:
  build:
    strategy:
      matrix:
        include:
          # Each platform is pinned to a runner of the same architecture (no QEMU).
          - platform: amd64
            runner_os: ubuntu-latest
          - platform: arm64
            runner_os: ARM64
    runs-on: ${{ matrix.runner_os }}
    steps:
      - uses: actions/checkout@v4
      - name: Login into ACR
        uses: azure/docker-login@v2
        with:
          login-server: ${{ env.REGISTRY_URL }}
          username: ${{ env.REGISTRY_USERNAME }}
          password: ${{ secrets.REGISTRY_PASSWORD }}
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
        with:
          version: latest
          use: true
      - name: Build and push docker containers
        uses: docker/build-push-action@v6
        with:
          build-args: |
            ARG1=${{ inputs.args_arg1 }}
            ARG2=${{ inputs.args_arg2 }}
          context: ${{ env.CONTEXT }}
          file: ${{ env.DOCKER_FILE }}
          platforms: linux/${{ matrix.platform }}
          push: true
          # Per-platform tag, e.g. <image>:arm64-<version>
          tags: |
            ${{ env.IMAGE_NAME }}:${{ matrix.platform }}-${{ inputs.version }}

  manifest:
    runs-on: ubuntu-latest
    needs: [build]
    outputs:
      image_tag_common: ${{ steps.set_outputs.outputs.image_tag_common }}
    steps:
      - name: Login into ACR
        uses: azure/docker-login@v2
        with:
          login-server: ${{ env.REGISTRY_URL }}
          username: ${{ env.REGISTRY_USERNAME }}
          password: ${{ secrets.REGISTRY_PASSWORD }}
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
        with:
          version: latest
          use: true
      - name: Create multi-arch manifest
        run: |
          echo "Creating multi-arch manifest for version: ${{ inputs.version }}"
          platforms="${{ env.PLATFORMS }}"
          echo "Platforms: $platforms"
          # Collect the per-platform image refs in an array (no eval needed).
          refs=()
          IFS=',' read -ra PLATFORM_ARRAY <<< "$platforms"
          for platform in "${PLATFORM_ARRAY[@]}"; do
            image_tag="${{ env.IMAGE_NAME }}:$platform-${{ inputs.version }}"
            echo "Adding platform image: $image_tag"
            refs+=("$image_tag")
          done
          # One tag that points at all per-platform images.
          docker buildx imagetools create \
            --tag "${{ env.IMAGE_NAME }}:${{ inputs.version }}" \
            "${refs[@]}"
      - name: Set outputs
        id: set_outputs
        run: |
          echo "Image tag common: ${{ env.IMAGE_NAME }}:${{ inputs.version }}"
          echo "image_tag_common=${{ env.IMAGE_NAME }}:${{ inputs.version }}" >> "$GITHUB_OUTPUT"
```

Are there workarounds here? Absolutely. Does this work? 100%. Did this solve the long build problem? Definitely. Build time dropped from 100-110 minutes to 5-7. The build scheme started looking like this:

Production baremetal is deliberately built after Production. There is still only one ARM64 runner, and we don't want to delay the production deployment while two different prod variants build. In this case, it's better to explicitly put baremetal last in the queue.

Besides the build, I also made a reusable template for deployment because, although k8s is used everywhere, deployment to the different environments varies too. I won't describe the deployment template here, but I'll draw attention to one amusing moment.

Here's part of the root pipeline that calls the reusable templates:

```yaml
build-production:
  uses: ./.github/workflows/build.yml
  needs: [version]
  if: ${{ github.ref == 'refs/heads/main' }}
  with:
    version: ${{ needs.version.outputs.version }}
    args_arg1: foo
    args_arg2: bar
  secrets:
    REGISTRY_PASSWORD: ${{ secrets.REGISTRY_PASSWORD }}

deploy-production:
  uses: ./.github/workflows/deploy.yml
  needs: [build-production]
  with:
    image: ${{ needs.build-production.outputs.image_tag_common }}
    environment: production
  secrets:
    AZURE_CREDENTIALS: ${{ secrets.AZURE_CREDENTIALS }}
```

What could have gone wrong here? Nothing, everything should work... But wait. Why is an empty value being passed to the deploy job's image parameter? It's definitely present during the build stage and is set correctly.

https://github.com/orgs/community/discussions/37942. Ah, that's what it is, thanks, GitHub. That's it.

Looking at semantic-release.