Aged Relic CI/CD Process Update: Preparation and Planning

kosar

In 2020, it’s nearly impossible to find a project stack description without at least one of the following words: IaC, microservices, Kubernetes, Docker, AWS/Azure/GCloud, blockchain, ML, VR and so on. And that’s fantastic! Progress doesn’t stand still. We’re growing, and with us, our projects grow too, along with more convenient and functional tools that solve modern problems.

Hi there. That’s how I originally wanted to start this article. But then I rethought some things, talked with colleagues, and realized I would’ve been wrong. There are still projects out there that are over 15 years old, with managers and team members who are stuck in the past, and these projects naturally come with an outdated technology stack that is difficult to maintain in the current tech jungle. And for various reasons, it’s just not feasible to overhaul the whole project (the client’s old-school, there’s no approval, the project is massive, migration is dragging on, or everyone’s simply content with it), so we end up maintaining it. Even worse is when a relic like this is still actively developed. It’s like a snowball effect. The client and the public demand features, the code needs to be delivered, servers require care and feeding… and Bitbucket... well, Bitbucket dropped Mercurial support. This is precisely the kind of case we’re dealing with.

Here's what we’ll cover: converting Mercurial to Git, migrating CI from CruiseControl.NET to TeamCity, and switching from git-deployment to Octopus with a small overview of the entire process.

There’s a lot of text, so we’ll break it into parts for easier digestion. Contents:

- Part 1: What we have, why it’s a problem, planning, and a bit of bash.

- Part 2: TeamCity.

- Part 3: Octopus Deploy.

- Part 4: Behind the scenes. Pain points, future plans, and perhaps an FAQ. Semi-technical.

I wouldn’t call this a guide to follow for many reasons: limited immersion in the project due to time constraints, not enough experience with these kinds of things at this moment, an enormous monolith where all subprojects are tightly intertwined, and a ton of nuances that make you burn, wonder, and just cope with them, but never truly delight in them. Also, because the project is unique in certain aspects, some steps are tailored specifically to this case.

The classic introduction

We had a Mercurial repository, 300+ (open!) branches, ccnet, another ccnet + git (for deployments), and a vast array of project modules, each with its own config and separate clients, four environments, a mountain of IIS pools, as well as cmd scripts, SQL, over five hundred domains, five dozen builds, and active development to top it off. Not that all of this was necessary, but it worked—clunky and slow. My biggest concern was the existence of other projects needing my attention. There’s nothing more dangerous in tasks of this scale than a lack of concentration and constant interruptions.

I knew that sooner or later, I’d have to focus on other tasks, so I spent a massive amount of time studying the existing infrastructure, so at least I wouldn’t face unsolvable questions about it later on.

Unfortunately, I can’t give a complete description of the project due to confidentiality, so we’ll stick to general technical details. Any names related to the project are blacked out in the screenshots - sorry about the black blotches.

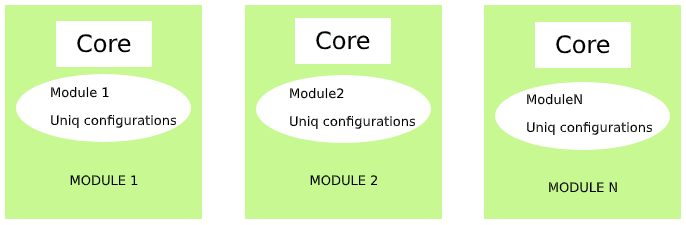

A key feature of this project is that it has several modules with a common core but different configurations. There are also modules with a "special" approach, aimed at resellers and large clients. One module can serve more than one client. By “client,” I mean a separate organization or group of people who get access to a specific module. Each client has their own domain, their own design, and their unique configurations. Client identification is based on the domain they use.

Here’s a diagram of this part of the project:

As you can see, the core is the same everywhere, which we’ll be able to leverage.

Reasons for re-evaluating and updating the CI/CD processes:

- CruiseControl.NET was used as the build system, which is generally a dubious pleasure to work with. XML configs spanning multiple screens, a mess of dependencies and config linkages, and a lack of modern solutions for today’s problems.

- Some developers (mainly leads) on the project needed access to the build servers and sometimes liked to change ccnet configs they really shouldn’t be touching. You can guess what happens next. There needs to be simple, user-friendly permissions management in the CI system without stripping developers of server access. In short, without a config file, there’s nowhere for itchy fingers to meddle.

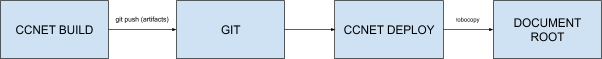

- As a deployment system, we used… also CCNET, but on the client side with git. The build and code delivery process looked something like this.

- Experimentally, we found that maintaining this particular system was no walk in the park. It consumed a lot of time - a luxury we couldn’t afford.

- Build configurations were stored on a shared server with other projects. As this project grew, it was decided to allocate separate services (build and deployment systems) for it on the customer’s servers.

- Lack of visibility and centralization. Which module versions are deployed on which server? What’s the average update frequency? How current is a particular module? The answers to these and many other questions weren’t easy to find.

- Inefficient use of resources and an outdated build and code delivery process.

In the planning phase, we decided to use TeamCity as the build system and Octopus as the deployment system. The client’s hardware infrastructure remained unchanged: separate dedicated servers for dev, test, staging, and production, as well as reseller servers (mostly production environments).

A Proof of Concept was provided to the client based on one of the modules, an action plan was drafted, and preliminary work was carried out (installation, configuration, etc.). No need to go into detail here - how to install TeamCity can be found on the official website. I’ll just highlight some of the requirements we formulated for the new system:

- Ease of maintenance with all that implies (backups, updates, troubleshooting if problems arise).

- Versatility. Ideally, to develop a common scheme for building and delivering all modules and use it as a template.

- Minimize the time spent on adding new build configurations and maintenance. Clients come and go, and sometimes new setups are needed. When creating a new module, there should be no delay in setting up its delivery.

Around this time, we recalled Bitbucket dropping support for Mercurial repositories, adding the requirement to migrate the repository to Git while preserving branches and commit history.

Preparation: repository conversion

Surely, someone out there must have tackled this task before us. We just need to find a ready-made solution. "Fast Export" turned out to be not so fast, and it didn’t work. Unfortunately, the error logs and screenshots are lost, but it just didn’t work. Bitbucket doesn’t provide its own converter (though it could have). A few more methods we googled also missed the mark. So, I decided to write my own scripts - after all, this wasn’t the only one Mercurial repository, and they might come in handy in the future. Here are the early scripts (they’re still the same today). The logic roughly follows:

- Use the Mercurial

hggitextension as a base.

cd /path/to/hg/repo

cat << EOF >> ./.hg/hgrc

[extensions]

hggit=

EOF- Get a list of all branches in the Mercurial repository.

hg branches > ../branches- Rename branches (thanks, Mercurial devs, for allowing spaces in branch names, and special thanks for umlauts and other symbols that add joy to life). Sarcasm detected.

#!/usr/bin/env bash

hgBranchList="./branches"

sed -i 's/:.*//g' ${hgBranchList}

symbolsToDelete=$(awk '{print $NF}' FS=" " ${hgBranchList} > sym_to_del.tmp)

i=0

while read hgBranch; do

hgBranches[$i]=${hgBranch}

i=$((i+1))

done <${hgBranchList}

i=0

while read str; do

strToDel[$i]=${str}

i=$((i+1))

done < ./sym_to_del.tmp

for i in ${!strToDel[@]}

do

echo ${hgBranches[$i]} | sed "s/${strToDel[$i]}//" >> result.tmp

done

sed -i 's/[ \t]*$//' result.tmp

sed 's/^/"/g' result.tmp > branches_hg

sed -i 's/$/"/g' branches_hg

sed 's/ /-/g' result.tmp > branches_git

sed -i 's/-\/-/\//g' branches_git

sed -i 's/-\//\//g' branches_git

sed -i 's/\/-/\//g' branches_git

sed -i 's/---/-/g' branches_git

sed -i 's/--/-/g' branches_git

rm sym_to_del.tmp

rm result.tmp- Create bookmarks (hg bookmark) and push them to an intermediate repository. Why? Because it’s Bitbucket, and you can’t create a bookmark with the same name as a branch (like “staging”).

#!/usr/bin/env bash

gitBranchList="./branches_git"

hgBranchList="./branches_hg"

hgRepo="/repos/reponame"

i=0

while read hgBranch; do

hgBranches[$i]=${hgBranch}

i=$((i+1))

done <${hgBranchList}

i=0

while read gitBranch; do

gitBranches[$i]=${gitBranch}

i=$((i+1))

done <${gitBranchList}

cd ${hgRepo}

for i in ${!gitBranches[@]}

do

hg bookmark -r "${hgBranches[$i]}" "${gitBranches[$i]}-cnv"

done

hg push git+ssh://[email protected]:username/reponame-temp.git

echo "Done."- In the new (Git) repository, remove the branch name suffix and migrate hgignore to gitignore.

#!/bin/bash

# clone repo, run git branch -a, delete remotes/origin words to leave only branch names, delete -cnv postfix, delete default branch string because we can't delete it

repo="/repos/repo"

gitBranchList="./branches_git"

defaultBranch="default-cnv"

while read gitBranch; do gitBranches[$i]=${gitBranch}; i=$((i+1)); done < $gitBranchList

cd $repo

for i in ${!gitBranches[@]}; do git checkout ${gitBranches[$i]}-cnv; done

git checkout $defaultBranch

for i in ${!gitBranches[@]}; do

git branch -m ${gitBranches[$i]}-cnv ${gitBranches[$i]}

git push origin :${gitBranches[$i]}-cnv ${gitBranches[$i]}

git push origin -u ${gitBranches[$i]}

done- Push to the main repository.

#!/bin/bash

# clone repo, run git branch -a, delete remotes/origin words to leave only branch names, delete -cnv postfix, delete default branch string because we can't delete it

repo="/repos/repo"

gitBranchList="./branches_git"

defaultBranch="default"

while read gitBranch; do gitBranches[$i]=${gitBranch}; i=$((i+1)); done < $gitBranchList

cd $repo

for i in ${!gitBranches[@]}; do

git checkout ${gitBranches[$i]}

sed -i '1d' .hgignore

mv .hgignore .gitignore

git add .

git commit -m "Migrated ignore file"

doneLet me explain some of these steps, especially the use of the intermediate repository. Initially, branch names in the converted repository have a “-cnv” suffix. This is due to how hg bookmark works. This suffix has to be removed, and hgignore files have to be converted to gitignore. All of this adds to the history, unnecessarily inflating the repository size. Here’s another example: try creating a repository and pushing a 300MB binary as the first commit. Then add it to gitignore and push without it. It remains in the history. Now try deleting it from the history (git filter-branch). With a certain number of commits, the resulting repo size won’t shrink—it will grow. This can be resolved with optimization, but Bitbucket won’t trigger it - it only happens during repo import. So all draft operations are performed with the intermediate, and then it’s imported into the new one. The final size of the intermediate repo was 1.15GB, while the resulting one was 350MB.

Conclusion

The migration process was broken down into several stages:

- Preparation (demo for the client using one build, software installation, repository conversion, updating existing ccnet configs);

- CI/CD setup for the dev environment and testing (the old system remains operational in parallel);

- CI/CD setup for the remaining environments (alongside the existing "stuff") and testing;

- Migration of dev, staging, and test;

- Production migration and transferring domains from the old IIS instances to the new ones.

At the moment, the description of the successfully completed preparation stage is wrapping up. No monumental successes were achieved here, but the existing system didn’t break, and the Mercurial repository was migrated to Git. However, without documenting these processes, there would be no context for the more technical parts. In the next part, we’ll cover setting up the CI system with TeamCity.